This article recounts the story of how I became one of the 100 wealthiest players on a virtual betting site with over 10,000 active users. I was looking to sharpen my JavaScript skills when I came across a mention on Hacker News of what turned out to be the perfect learning project: Salty’s Dream Casino.

Salty’s Dream Casino

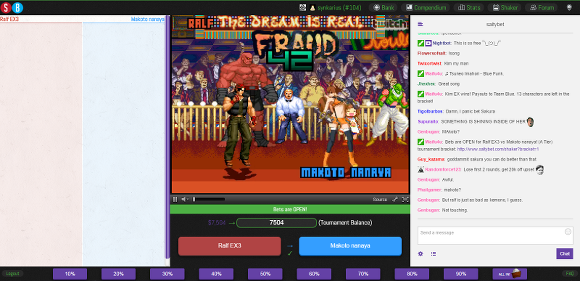

A little background is in order. Salty’s Dream Casino, a.k.a. SaltyBet, is a website whose main feature is an embedded Twitch video window and chat. The video window shows a video game called M.U.G.E.N. running 24/7. The game is a fighting game. If you sign up for an account on SaltyBet.com, you are given 400 “salty bucks” and can bet on who will win the fights. It’s all play money, of course, and if you run out, you automatically get a minimum amount so that you can keep betting. There are no human players controlling the characters; they are computer-controlled, some with better AI than others. (Some have laughably bad AI, but that’s part of the fun.) The characters are all player-created, and there are over 5,000 of them spread across five tiers: P, B, A, S, and X.

What I saw in SaltyBet was fast iteration for development (most matches are over in 1-2 minutes, the perfect amount of time to fix the logic and come back), a fun project, and data that would translate well into features for some of the machine learning algorithms that I’d been studying.

My Short Trip to the “0.1%”

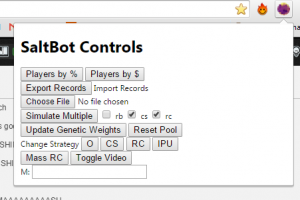

Since I would need to inject JavaScript into the site, I decided to build a Google Chrome extension. I didn’t know anything about browser extensions at the time, so that would also be a great learning opportunity. The first step was a basic runtime that ran alongside SaltyBet’s fight cycle. The very first version of the bot simply picked a side at random and bet 10% of its total winnings.
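A minimal sketch of that first coin-toss bettor is below. It assumes the extension already knows the current balance, and it labels the two fighters "red" and "blue". The function name `coinTossBet` and the injectable `rng` parameter are hypothetical; they exist only so the logic can be tested. Reading the page and submitting the bet are not shown.

```javascript
// Hypothetical sketch: pick a side at random and stake 10% of the balance.
// `rng` defaults to Math.random but can be replaced with a deterministic source.
function coinTossBet(balance, rng = Math.random) {
  const side = rng() < 0.5 ? "red" : "blue";
  // Whole salty bucks only: 10% of the balance, rounded down.
  const amount = Math.floor(balance / 10);
  return { side, amount };
}

// With the 400-buck sign-up balance, the stake is 40 on a random side.
console.log(coinTossBet(400));
```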

Happy that I’d gotten the extension working, I left it on overnight. When I woke up, my ranking was #512 out of 400,000 total accounts, with $367,000. As I suspected, this was just a great stroke of luck. When I got home that day, the bot had pissed away most of the money.

The Progression of Strategies

To create strategies any more advanced than a coin toss, I would need to collect data. I implemented some basic stat collection using Chrome Storage, along with import and export of records, then got to work on the first real strategy, “More Wins”. As its name implies, it simply compared wins and losses to determine which character to bet on. If there was a tie, it fell back on a coin toss.
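Here is a rough sketch of the “More Wins” rule. It reads the rule as a straight comparison of recorded wins, which is what the example in the next paragraph implies. It assumes each character's record is an object with `wins` and `losses` counts. The record shape, the function name, and the `rng` parameter are hypothetical and not taken from the extension.

```javascript
// Hypothetical record shape: { wins: number, losses: number } per character.
// "More Wins": bet on whichever character has more recorded wins,
// falling back to a coin toss when the counts are tied.
function moreWins(red, blue, rng = Math.random) {
  if (red.wins > blue.wins) return "red";
  if (blue.wins > red.wins) return "blue";
  return rng() < 0.5 ? "red" : "blue"; // tie: coin toss
}

// The failure mode from the next paragraph: a champion we have no data on
// loses the comparison to a weak character with a single recorded win.
const champ = { wins: 0, losses: 0 };
const weakling = { wins: 1, losses: 7 };
console.log(moreWins(champ, weakling)); // → blue
```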

I plugged in RGraph to see just how much better “More Wins” was doing than a coin toss. I was dismayed to see that although the coin toss strategy had the expected 50% accuracy rate, “More Wins” was at 40%! After modifying the bot to print out its decision-making logic at betting time, I realized that (A) lots of matches were being decided by coin tosses, and more importantly, (B) lots of bad calls were being made because I didn’t have enough data to effectively compare wins and losses. For example, if there were a matchup between a very strong character whom I didn’t have any data on, and a relatively weak character with one win and a bunch of losses, the loser’s single recorded win would trump the zero recorded wins of the champ.

(At this point, of course I could have signed up for a premium account and gotten full access to character statistics, but where’s the fun in that? Besides, I wanted my bot to be able to work with limited data, since premium accounts really just had a larger amount of limited data.)

My solution was to create “More Wins Cautious”, which would only make a bet if it had at least three recorded matches for each character. While MWC did do a few percentage points better, it almost never bet. Not a good solution.
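The cautious variant only adds a data threshold in front of the same comparison. In this sketch, returning `null` (meaning “skip this match”) and the name `MIN_MATCHES` are assumptions. The record shape is the same hypothetical `{ wins, losses }` object.

```javascript
// Hypothetical "More Wins Cautious": same rule as More Wins, but abstain
// (return null) unless both characters have at least MIN_MATCHES recorded fights.
const MIN_MATCHES = 3;

function moreWinsCautious(red, blue, rng = Math.random) {
  const played = (c) => c.wins + c.losses;
  if (played(red) < MIN_MATCHES || played(blue) < MIN_MATCHES) {
    return null; // not enough data: don't bet
  }
  if (red.wins > blue.wins) return "red";
  if (blue.wins > red.wins) return "blue";
  return rng() < 0.5 ? "red" : "blue";
}

console.log(moreWinsCautious({ wins: 0, losses: 0 }, { wins: 1, losses: 7 })); // → null
console.log(moreWinsCautious({ wins: 2, losses: 1 }, { wins: 1, losses: 7 })); // → red
```

Because most characters had fewer than three recorded fights, a rule like this abstains on most matches. That is the “almost never bet” problem described above.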

I had a bit more data by this point and had also started to realize that comparing wins and losses both rewarded and penalized popular characters more than it should. For example, consider the following two character records. The parts in parentheses represent data that my bot had recorded.

| Name | Wins : Losses |
|---|---|
| Adam | WWWWWW(WW) : (L)LL |
| BenJ | WWWWWW(WWW) : (LL)LLLLLLLLLLLLLL |

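As you can see, “BenJ” is being rewarded for being popular rather than effective. He has more recorded wins than Adam (three to two) only because he fights more often, despite a far worse overall record. My next strategy, “Ratio Balance”, compared win percentage rather than number of wins. This yielded a fairly significant improvement: 55% accuracy.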
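A sketch of “Ratio Balance” follows, applied to the recorded (parenthesized) parts of the Adam and BenJ records above. Falling back to a coin toss when either side has no recorded fights, or when the rates tie, is an assumption. The function names are hypothetical.

```javascript
// Hypothetical "Ratio Balance": compare recorded win percentage instead of raw wins.
function winRate(c) {
  const played = c.wins + c.losses;
  return played === 0 ? null : c.wins / played; // null = no data yet
}

function ratioBalance(red, blue, rng = Math.random) {
  const r = winRate(red);
  const b = winRate(blue);
  // Assumption: missing data or equal rates fall back to a coin toss.
  if (r === null || b === null || r === b) return rng() < 0.5 ? "red" : "blue";
  return r > b ? "red" : "blue";
}

// Only the recorded (parenthesized) parts of the two records.
const adam = { wins: 2, losses: 1 }; // 2 of 3 recorded fights won
const benj = { wins: 3, losses: 2 }; // 3 of 5 recorded fights won
console.log(ratioBalance(adam, benj)); // → red (Adam), where More Wins would pick BenJ
```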

Enter Machine Learning

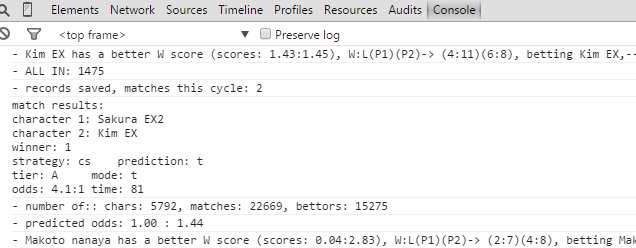

Wanting to apply some of the machine learning material I’d been studying recently, I upgraded the bot to collect more information than just wins and losses. For each match, it now recorded:

- the match odds;
- the match time (to reward faster wins and slower losses);
- the favorite of bettors with premium accounts (who make up about 5% of total active accounts);
- the crowd favorite.

The next version of the bot, “Confidence Score”, combined all of those features to make its decision.
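The article doesn’t spell out how “Confidence Score” combined its features, so this is only a sketch of one plausible reading. Each feature is first reduced to a signed vote in [-1, 1], with positive favoring red and negative favoring blue. The votes are then combined using per-feature weights, and the normalized magnitude doubles as a confidence. The function name, feature keys, and example numbers are all hypothetical.

```javascript
// Hypothetical "Confidence Score"-style combiner.
// Assumption: each feature is already a signed vote in [-1, 1]
// (positive favors red, negative favors blue).
function confidenceScore(features, weights) {
  let total = 0;
  let weightSum = 0;
  for (const [name, vote] of Object.entries(features)) {
    const w = weights[name] ?? 0; // unknown features get no weight
    total += w * vote;
    weightSum += w;
  }
  if (weightSum === 0 || total === 0) return { side: null, confidence: 0 };
  return {
    side: total > 0 ? "red" : "blue",
    confidence: Math.abs(total) / weightSum, // 0..1
  };
}

// Illustrative numbers only.
const weights = { odds: 1.0, time: 0.5, winPercentage: 2.0, crowd: 0.1, premium: 0.1 };
const features = { odds: -0.5, time: 0.2, winPercentage: 0.8, crowd: -1, premium: -1 };
console.log(confidenceScore(features, weights));
```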

But how should the different features be weighted? The problem was a good fit for a genetic algorithm, so I put all of those weights on a chromosome. I then built a simulator that would go back through all the recorded matches and try out different weighting combinations, selecting for accuracy. Accuracy immediately leapt to 65%! In the days that followed, the chromosome class underwent a lot of changes, but its final form looked like the following.

Confidence weights:

| Weight | Value |
|---|---|
| oddsWeight | 1.40949 |
| timeWeight | 0.04281 |
| winPercentageWeight | 1.42489 |
| crowdFavorWeight | 0.00035 |
| illumFavorWeight | 0.00211 |

Confidence sub-weights by tier. The first two columns weight wins and losses when computing win percentage; the last two weight match times for wins and losses. Each sub-weight’s name is the column prefix followed by the tier letter; for example, wX is 28.47763 and ltS is 6.50902.

| Tier | Wins (w) | Losses (l) | Odds (o) | Win times (wt) | Loss times (lt) |
|---|---|---|---|---|---|
| X | 28.47763 | 1.39220 | 62.16219 | 0.04751 | 0.22788 |
| S | 6.91912 | 2 | 2.40280 | 2.02764 | 6.50902 |
| A | 5.42984 | 4.76105 | 0.00143 | 4.78510 | 0.15164 |
| B | 0.68912 | 0.00054 | 15.06011 | 37.18573 | 0.00147 |
| P | 0.00269 | 0.05364 | 0.00200 | 0.07104 | 0.00193 |
| U | 0.05827 | 0.67660 | 0.17487 | 2.45965 | 0.01134 |
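The simulator itself isn’t reproduced here, so the following is a generic genetic-algorithm sketch in the same spirit. It makes several assumptions:

- each feature is reduced to a signed vote (positive favors red);
- a chromosome is a plain array of non-negative weights;
- a pick is the sign of the weighted sum;
- fitness is simply accuracy over the recorded matches.

Tournament selection, uniform crossover, multiplicative mutation, elitism, and the names `evolve`, `predict`, and `accuracy` are illustrative choices, not the extension’s code. A seeded PRNG (mulberry32) keeps runs reproducible.

```javascript
// Seeded PRNG (mulberry32) so every run is reproducible.
function mulberry32(seed) {
  let a = seed | 0;
  return function () {
    a = (a + 0x6d2b79f5) | 0;
    let t = a;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296; // uniform in [0, 1)
  };
}

// A recorded match: { features: number[], winner: "red" | "blue" }.
function predict(weights, features) {
  let s = 0;
  for (let i = 0; i < weights.length; i++) s += weights[i] * features[i];
  return s > 0 ? "red" : "blue";
}

function accuracy(weights, matches) {
  let correct = 0;
  for (const m of matches) if (predict(weights, m.features) === m.winner) correct++;
  return correct / matches.length;
}

function evolve(matches, numWeights, { popSize = 30, generations = 50, mutationRate = 0.2, seed = 1 } = {}) {
  const rand = mulberry32(seed);
  let pop = Array.from({ length: popSize }, () => Array.from({ length: numWeights }, () => rand()));

  // Tournament selection: the fittest of three randomly drawn chromosomes.
  const tournament = (scored) => {
    let best = scored[Math.floor(rand() * scored.length)];
    for (let k = 0; k < 2; k++) {
      const c = scored[Math.floor(rand() * scored.length)];
      if (c.fitness > best.fitness) best = c;
    }
    return best.weights;
  };

  let champion = null;
  for (let g = 0; g < generations; g++) {
    const scored = pop.map((w) => ({ weights: w, fitness: accuracy(w, matches) }));
    scored.sort((x, y) => y.fitness - x.fitness);
    champion = scored[0];
    const next = [champion.weights]; // elitism: the best survives unchanged
    while (next.length < popSize) {
      const a = tournament(scored);
      const b = tournament(scored);
      const child = a.map((w, i) => (rand() < 0.5 ? w : b[i])); // uniform crossover
      for (let i = 0; i < child.length; i++) {
        if (rand() < mutationRate) child[i] *= 0.5 + rand(); // scale by 0.5..1.5
      }
      next.push(child);
    }
    pop = next;
  }
  return champion; // { weights, fitness } of the last evaluated generation
}

// Toy data: feature 0 always points at the winner, feature 1 is noise.
const matches = Array.from({ length: 60 }, (_, i) => {
  const signal = i % 2 === 0 ? 1 : -1;
  const noise = i % 3 === 0 ? 1 : -1;
  return { features: [signal, noise], winner: signal > 0 ? "red" : "blue" };
});
const best = evolve(matches, 2);
console.log(best.fitness, best.weights);
```

On real data, the weights would be the chromosome fields listed above, and the feature votes would come from the recorded match history.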

I also had the bot vary its bet amount based on its confidence in its choice. This turned out to matter more than any single feature of the data: my winnings stopped fluctuating around the $20,000 mark and instead fluctuated around the $300,000 mark. Then I had the simulator select for (money * accuracy) instead of just accuracy. My virtual wealth moved up into the $450,000 range, even though accuracy dropped slightly. Of course, by this time I’d realized that most of the 400,000 accounts on SaltyBet were inactive, so I wasn’t really in the 1% yet.
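Here is a hedged sketch of that money-times-accuracy fitness. It assumes a chromosome’s picks have already been turned into per-match records holding a confidence (0 to 1), the payout per unit staked, and whether the pick won. The name `replay` and the record fields are hypothetical. The stake is the original 10% of the balance, scaled by confidence.

```javascript
// Hypothetical replay of a chromosome's decisions over recorded matches.
// Each record: { confidence: 0..1, payout: profit per unit staked, won: boolean }.
function replay(matches, startBalance) {
  let balance = startBalance;
  let correct = 0;
  for (const m of matches) {
    // 10% of the current balance, scaled by confidence, in whole bucks.
    const stake = Math.floor((balance * m.confidence) / 10);
    if (m.won) {
      balance += Math.floor(stake * m.payout);
      correct++;
    } else {
      balance -= stake;
    }
  }
  const accuracy = correct / matches.length;
  // Selecting on balance * accuracy instead of accuracy alone.
  return { balance, accuracy, fitness: balance * accuracy };
}

console.log(replay([
  { confidence: 1, payout: 1, won: true },
  { confidence: 0.5, payout: 2, won: false },
], 1000));
```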

Analysis

Before we move on, let’s take another look at that chromosome. A number of facts emerge from it that aren’t intuitively obvious, which is why I’ve come to enjoy watching the chromosomes evolve more than watching the actual matches.

- Win percentage dominates everything else. I actually added an anti-domination measure to the simulator: any chromosome in which one weight was worth more than all of the others combined had its fitness cut by 95%. (Doing so minimized the damage when the bot guessed wrong. Surprisingly, it cost only small fractions of a percent of accuracy.)

- Crowd favor and premium-account favor are essentially worthless; their weights evolved to nearly zero.

- Success and failure count differently in different tiers, and the distribution is far from uniform. For example, wins and odds in X tier count an order of magnitude more than almost everything else, but match times in X tier aren’t that important.

- Not shown here is that the chromosome formerly included confidence nerfs, conditions like “both characters are winners/losers” or “not enough information” which would decrease the betting amount if triggered, and also switches to turn the nerfs on and off (like epigenetic DNA). The simulator consistently turned off all of my nerfs, so I got rid of most of them. The true face of non-risk-aversion.
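The anti-domination penalty from the first point above amounts to a single check on the fitness score. This sketch assumes it was applied to the top-level confidence weights. The name `penalizedFitness` is hypothetical.

```javascript
// Hypothetical anti-domination penalty: if any single weight is larger than
// all of the other weights combined, keep only 5% of the chromosome's fitness.
function penalizedFitness(weights, rawFitness) {
  const total = weights.reduce((sum, w) => sum + w, 0);
  const dominated = weights.some((w) => w > total - w);
  return dominated ? rawFitness * 0.05 : rawFitness;
}

// The final top-level weights: winPercentageWeight (1.42489) is just under
// the sum of the rest (1.45476), so no penalty applies.
console.log(penalizedFitness([1.40949, 0.04281, 1.42489, 0.00035, 0.00211], 0.65)); // → 0.65
console.log(penalizedFitness([5, 1, 1], 0.65)); // dominated: about 0.0325
```

The final chromosome’s two big weights sitting almost exactly at parity is what you would expect from evolution pushing win percentage against this ceiling.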

You’ll also notice that there’s a tier, “U”, on the chromosome which I didn’t mention before. Due to some quirks of the site, my information gathering isn’t perfect. So, “U” stands for Unknown.

Why Not Also Track Humans?

I had learned a ton about JavaScript (like closures and hoisting!) and browser extensions, and was pretty happy with the project. My bot swung wildly between $300,000 and $600,000 with 63% accuracy. I started to wonder how accurate the other players were, and realized I could also track them. So I did. I collected accuracy statistics on players for 30 days. This unearthed a few more interesting facts.

- At 63% accuracy, my bot was in the 95th percentile. The most accurate bettor on the site bets with about 80% accuracy.

- Players with premium accounts bet 6% more accurately than free players, on average.

- Judging from the number of bets made, there were obviously other bots on the site.

- Some players who were significantly richer than I was bet with much lower accuracy. One of them bet with 33% accuracy.
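A percentile figure like this can be computed with a simple tally. The sketch assumes each tracked player is stored as counts of correct and total observed bets. The function names and the `minBets` cutoff (for ignoring players seen only a handful of times) are hypothetical. “Percentile” here means the share of eligible players with strictly lower accuracy.

```javascript
// Hypothetical per-player tally: { correct, total } bets seen during tracking.
function accuracyOf(p) {
  return p.total === 0 ? 0 : p.correct / p.total;
}

// Share (0..100) of eligible players whose accuracy is strictly below `acc`.
function percentileRank(acc, players, minBets = 1) {
  const eligible = players.filter((p) => p.total >= minBets);
  if (eligible.length === 0) return null;
  const below = eligible.filter((p) => accuracyOf(p) < acc).length;
  return (100 * below) / eligible.length;
}

// Twenty made-up players with accuracies 0%, 5%, ..., 95%.
const players = Array.from({ length: 20 }, (_, i) => ({ correct: i, total: 20 }));
console.log(percentileRank(0.63, players)); // → 65
```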

That last item in particular interested me. How could this be? … Upsets! I went back to the simulator and pulled out more statistics. By this time, I had quite a bit of data.

- The average odds on an upset were 3:1.

- The average odds on a non-upset were 14:1.

- Upsets constituted 23% of all matches.

- My bot was able to call 41% of all upsets correctly.

- My bot was able to call 73% of all non-upsets correctly.

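$$
\underbrace{3 \times 0.41 \times 0.23}_{\text{upset, called}}
+ \underbrace{(-1) \times 0.59 \times 0.23}_{\text{upset, missed}}
+ \underbrace{0.07 \times 0.73 \times 0.77}_{\text{non-upset, called}}
+ \underbrace{(-1) \times 0.27 \times 0.77}_{\text{non-upset, missed}}
\approx -0.02
$$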
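The same expected-value arithmetic can be written as a small function. It assumes each flat bet stakes $1: a correct pick earns `payout` dollars of profit, and a wrong pick loses the dollar. The name `expectedValue` and the field names are hypothetical. Using the exact favorite payout of 1/14 instead of the rounded 0.07 gives roughly -0.021, still about -0.02.

```javascript
// Expected profit per $1 flat bet, summed over match types.
// share: fraction of matches of this type; hitRate: fraction called correctly;
// payout: profit per $1 on a correct call (a wrong call loses the $1).
function expectedValue(cases) {
  return cases.reduce(
    (ev, c) => ev + c.share * (c.hitRate * c.payout - (1 - c.hitRate)),
    0
  );
}

const ev = expectedValue([
  { share: 0.23, hitRate: 0.41, payout: 3 },      // upsets at 3:1
  { share: 0.77, hitRate: 0.73, payout: 1 / 14 }, // non-upsets, betting the 14:1 favorite
]);
console.log(ev.toFixed(3));
```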

If I switched the bot to pure flat bet amounts, it would take a loss, but it would almost break even! With a little tweaking, it might be able to get into the black in a stable, linear way, rather than swinging wildly around a threshold. (I was still betting 10% of total winnings at this time.) It also occurred to me that, since my bot was on 24/7, there were lower-traffic times during which it could move the odds far enough to hurt itself. Flat bets would minimize that too.

I switched the bot over to flat betting amounts based on total winnings. (That is, it was allowed to bet $100 until its total passed $10,000, then $1,000 until it passed $100,000, and so forth.) I watched for a while and experimented with different things. What finally seemed to work was applying the confidence score to the flat amounts rather than to the original 10% of totals, so the amount to bet became (flat_amount * confidence). That did the trick. My losses were instantly cut by 10%, which meant I was getting a penny back on every dollar bet, on average. My rank has been steadily rising ever since. No more wild swings or caps, just slow wealth accumulation.
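The banded flat bet can be sketched as follows. The first two bands come from the text. The following parts are assumptions:

- continuing the pattern by powers of ten (“and so forth”);
- treating confidence as a number from 0 to 1;
- never staking more than the balance;
- the names `flatAmount` and `betAmount`.

```javascript
// Flat stake by bankroll band: $100 below $10,000, $1,000 below $100,000,
// and (assumed) ten times more for each further power of ten.
function flatAmount(balance) {
  let amount = 100;
  let threshold = 10000;
  while (balance >= threshold) {
    amount *= 10;
    threshold *= 10;
  }
  return amount;
}

// flat_amount * confidence, rounded to whole bucks and capped at the balance.
function betAmount(balance, confidence) {
  return Math.min(balance, Math.round(flatAmount(balance) * confidence));
}

console.log(flatAmount(5000), flatAmount(50000), betAmount(50000, 0.5)); // → 100 1000 500
```

Unlike a percentage of the bankroll, the stake only changes when the balance crosses a band boundary. A losing streak therefore doesn’t shrink bets until a whole band is lost, and a winning streak doesn’t inflate them enough to move the odds.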

I don’t work on the bot anymore, but I leave it on 24/7. I come home from work, see another $100,000 accrued, and smile. Sometimes it drops $100,000 instead, but the dips are always temporary. Since I started writing this article, it has accumulated $40,000. If you care to try it out yourself, or perhaps improve it somehow, please fork it. It’s on GitHub.